Picsellia platform structure

1. Global platform structure

In order to ease data scientists' lives and navigation across all the features of Picsellia, the platform has been divided into three main parts that handle each step of any Computer Vision project life cycle.

- Data Management

- Data Science

- Model Operations

2. Data Management

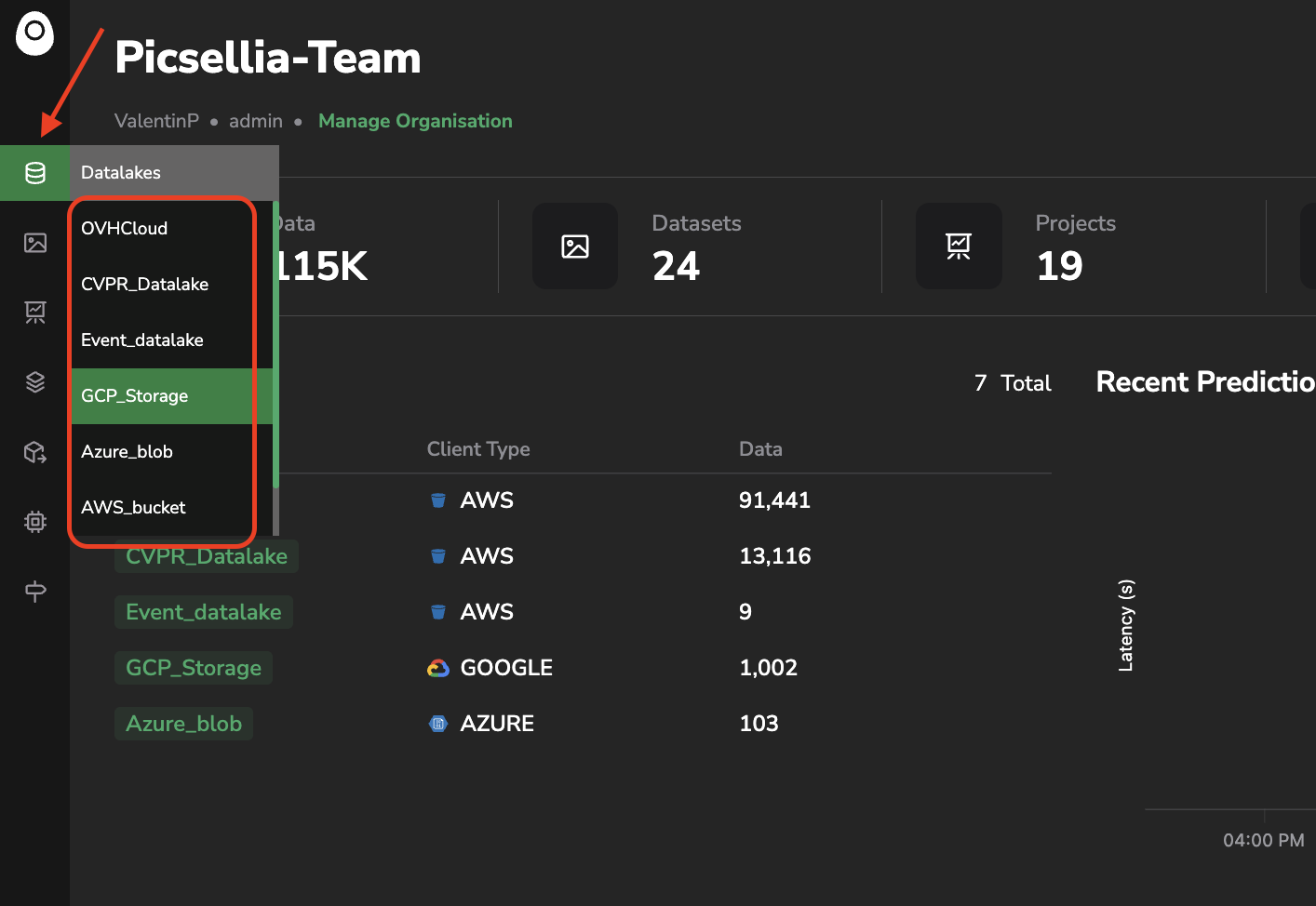

In this first main part of Picsellia, the purpose is to upload your raw images and at the end get the ideal Dataset. Data management is composed of two main features which are DatalakeandDataset available on top of the left sidebar.

Through the Datalake you will gather & organize all your Data in a single and shared place.

Datalake

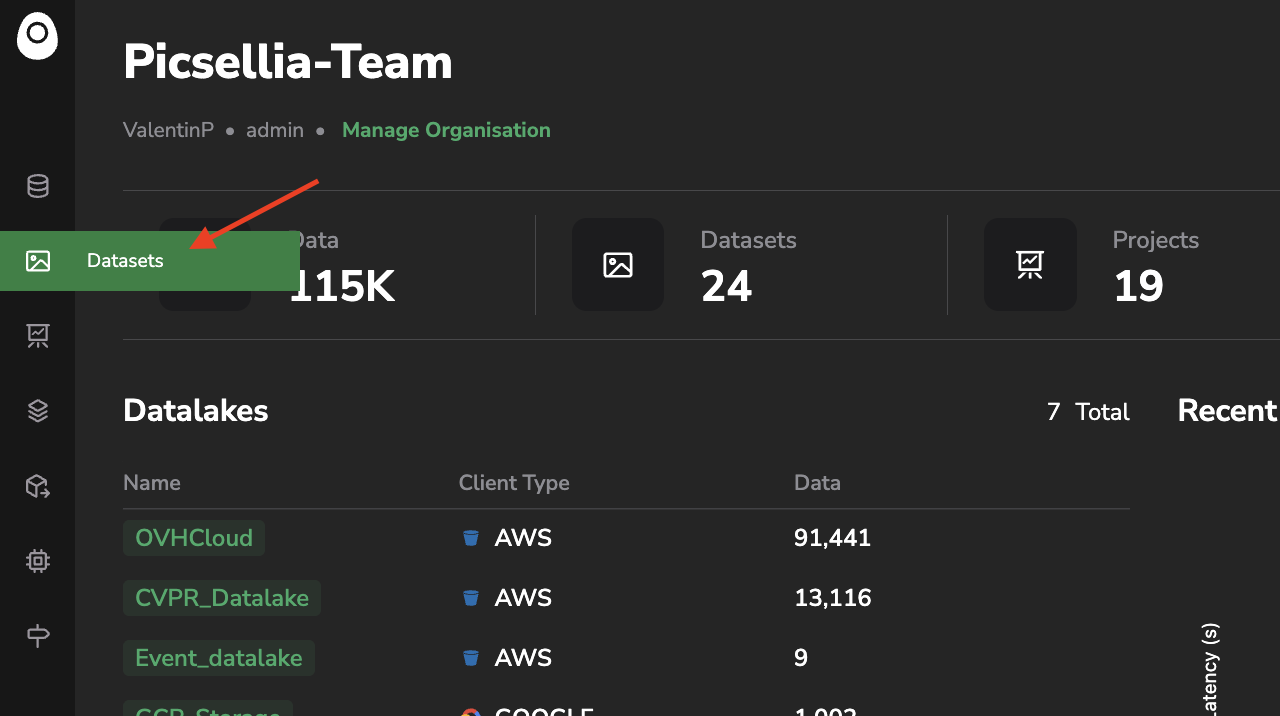

Using Datalake subsets, you will then be able to create your Datasets. Datasets management features will allow you to version, annotate, and process your different Datasets.

Datasets

3. Data Science

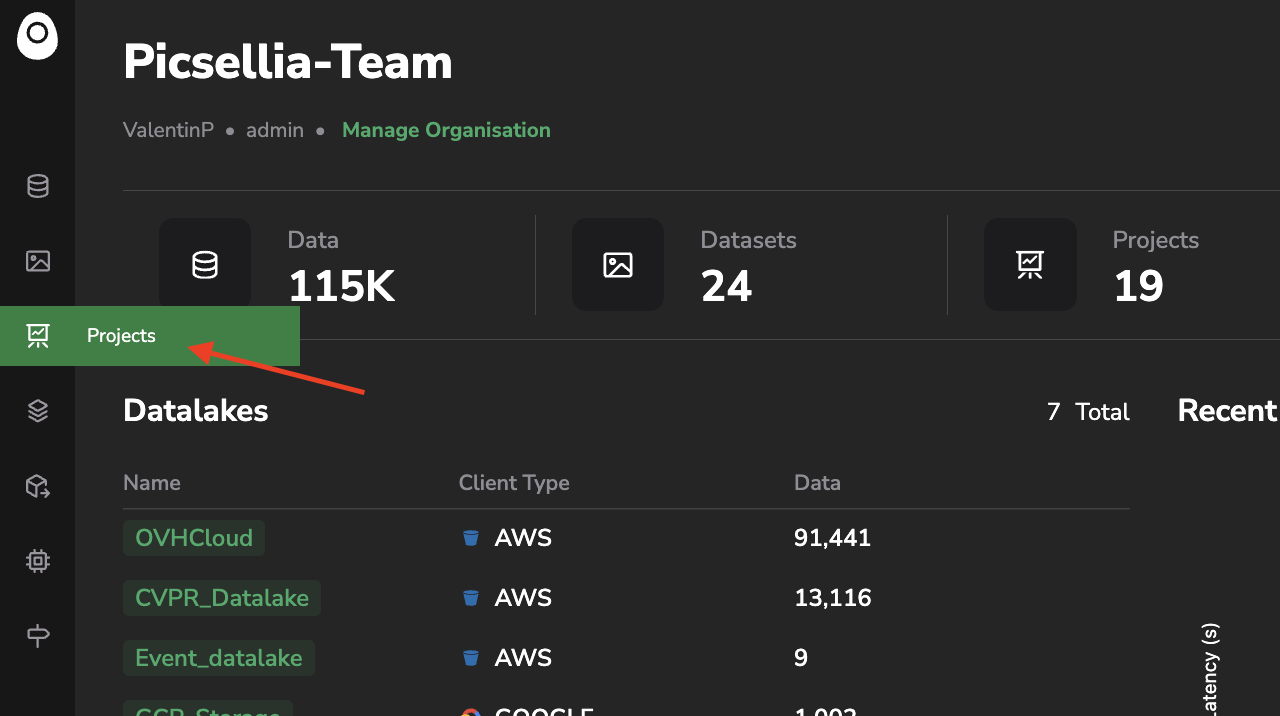

The Data Science part of Picsellia is mainly related to experiment tracking.

Under the Projects feature available on the left sidebar, you'll retrieve all your different Data Science projects.

Projects

Each Project is composed of one or several Experiments. After Experiment creation, you'll be able to launch the training of yourModel, assess the quality of the training through the Experiment Tracking dashboard, and evaluate the Model performances in the evaluation interface.

4. Model Operations

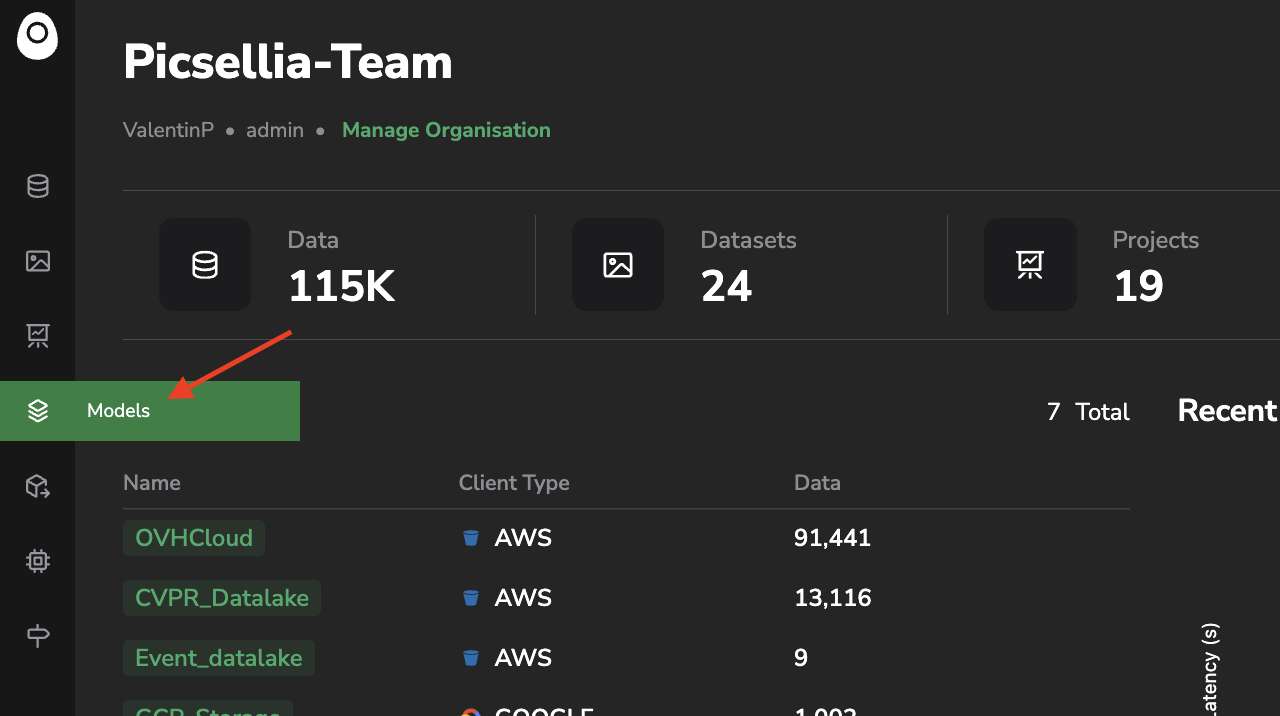

Model Operations features are used to operate a ready-to-deploy Models created with Picsellia or not.

All the Modelsare stored and versioned in your privateRegistry , from here, you can deploy them on Picsellia serving infra or on your own infrastructure.

Models

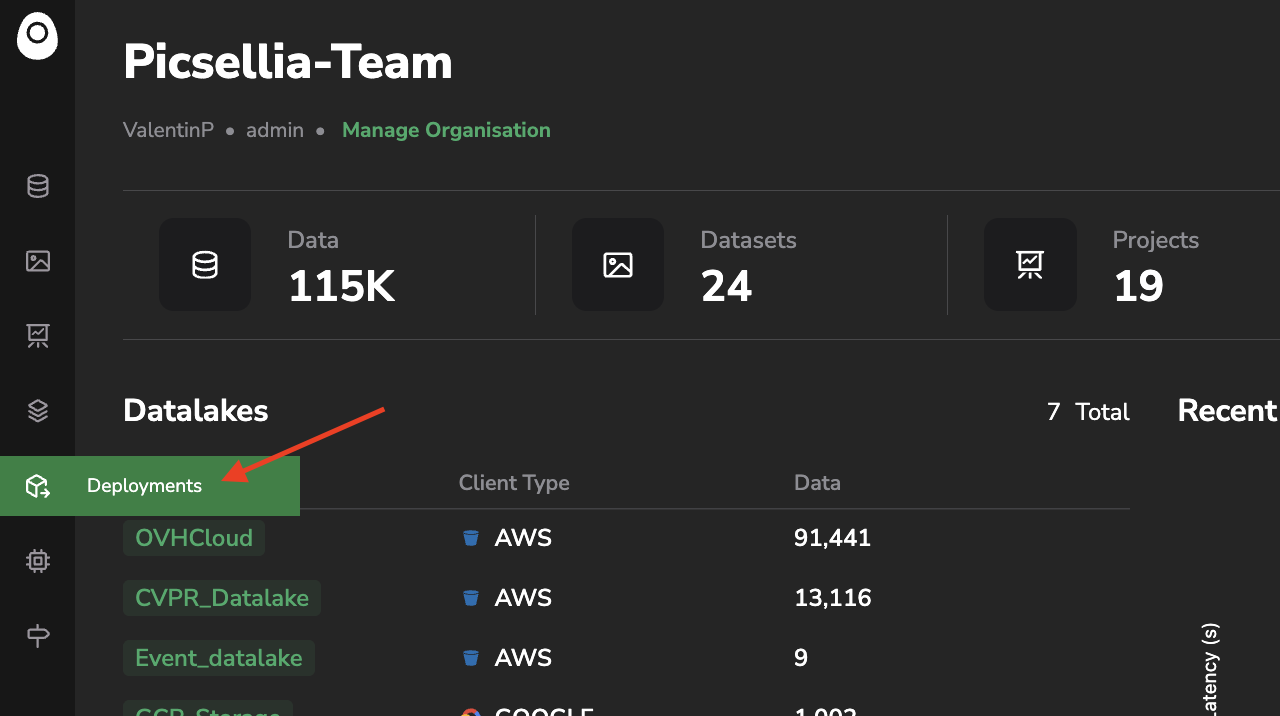

When deployed, keep trace and monitor your models in the Monitoring Dashboard accessible from the Deployment tab, also available in the left sidebar.

Deployments

Create your pipelinesFrom each

Deployment, you can set your data pipeline up in order to retrain & redeploy continuously yourModels.

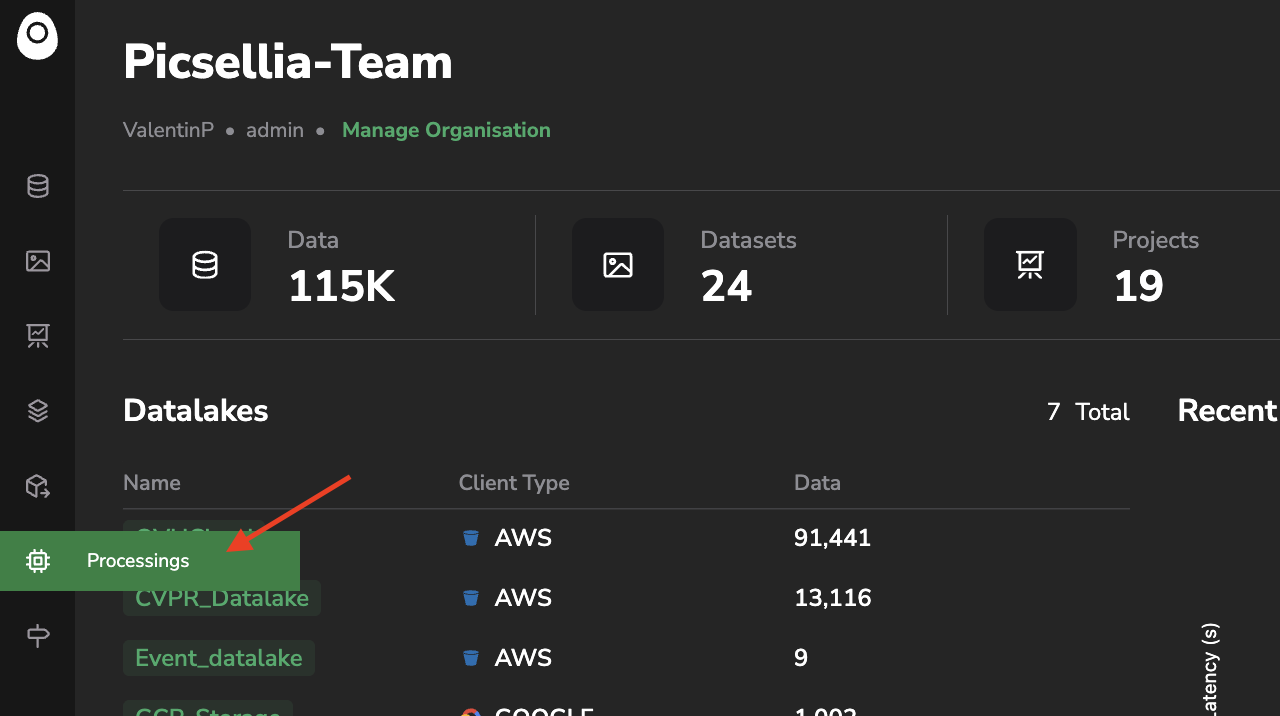

5. Processings

Processings allows you to embed your own scripts and execute them direct on your different Picsellia objects, to create a Processing you simply need to Dockerize any script and push it on a container registry.

Processings can be used on Datalake, DatasetVersion or ModelVersion, they can be used for many different use-cases such as Anonymization, Auto-tagging, Pre-annotation, Image Augmentation, Model Pruning....

An extended documentation on Processings creation is available here.

Processings

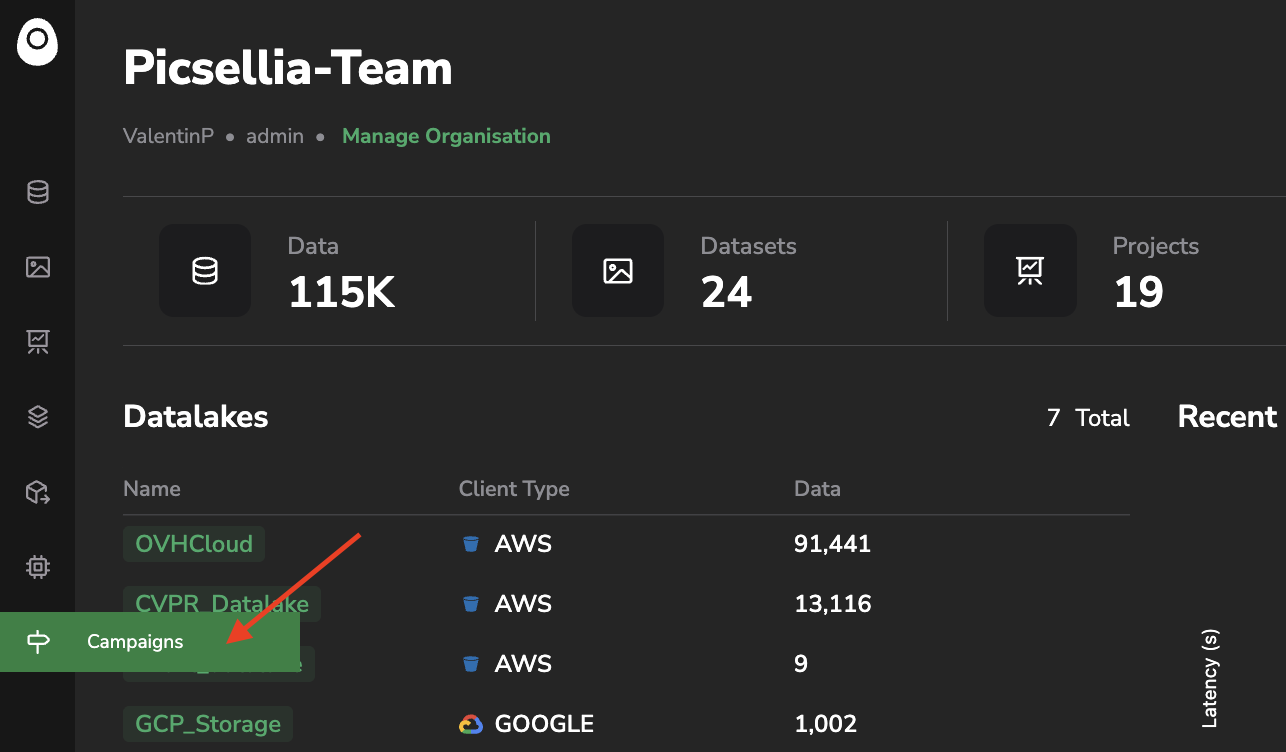

6. Campaigns Dashboard

Campaigns allows to structure the annotation or the review process on a DatasetVersion or a Deployment on Picsellia.

This page lists all the Campaigns related to your Organization, their status and the ongoing progress. The extended documentation related to the Annotation Campaign on DatasetVersion is available here and the one related to the Review Campaign on Deployment is available here.

The Campaigns Dashboard also provides aggregated metrics for all the users on all the Campaings related to your Organization.

Campaings

Updated 5 months ago