Experiment - Experiment overview

An Experiment is a Picsellia object whose main purpose is to track and structure the training of a ModelVersion.

Whichever tab of an Experiment you are on, the Experiment path is displayed at the top of the page, showing the current Project and Experiment names. Each element of this path is a clickable link, so you can move easily between the Project and the Experiment.

Experiment Path

1. Logs

The Logs tab displays two main types of information:

- The Experiment information, in the header
- The Metrics defined in the training script, which allow the user to assess the quality of the training

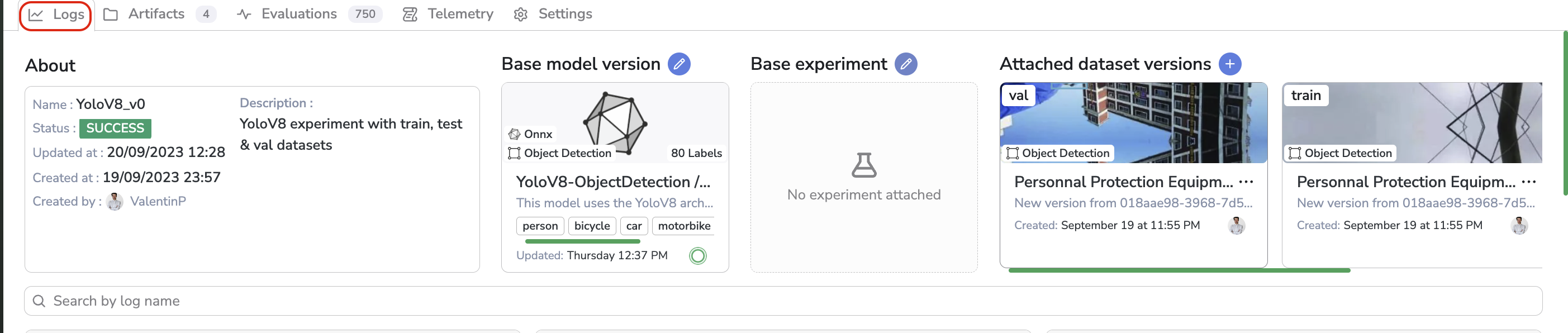

A. Header

The primary value of the Picsellia Experiment system is to bring structure and traceability to your whole Organization. This is why the header of the Logs tab displays all the information related to the current Experiment, giving the user a complete understanding of it.

Experiment header

The About section displays the contextual information related to the Experiment, such as its Name, Status, Description, Creation Date, and Creator. It also shows the Base Model Version used (if any), the Base Experiment used (if any), and the DatasetVersions attached to the current Experiment, along with their aliases.

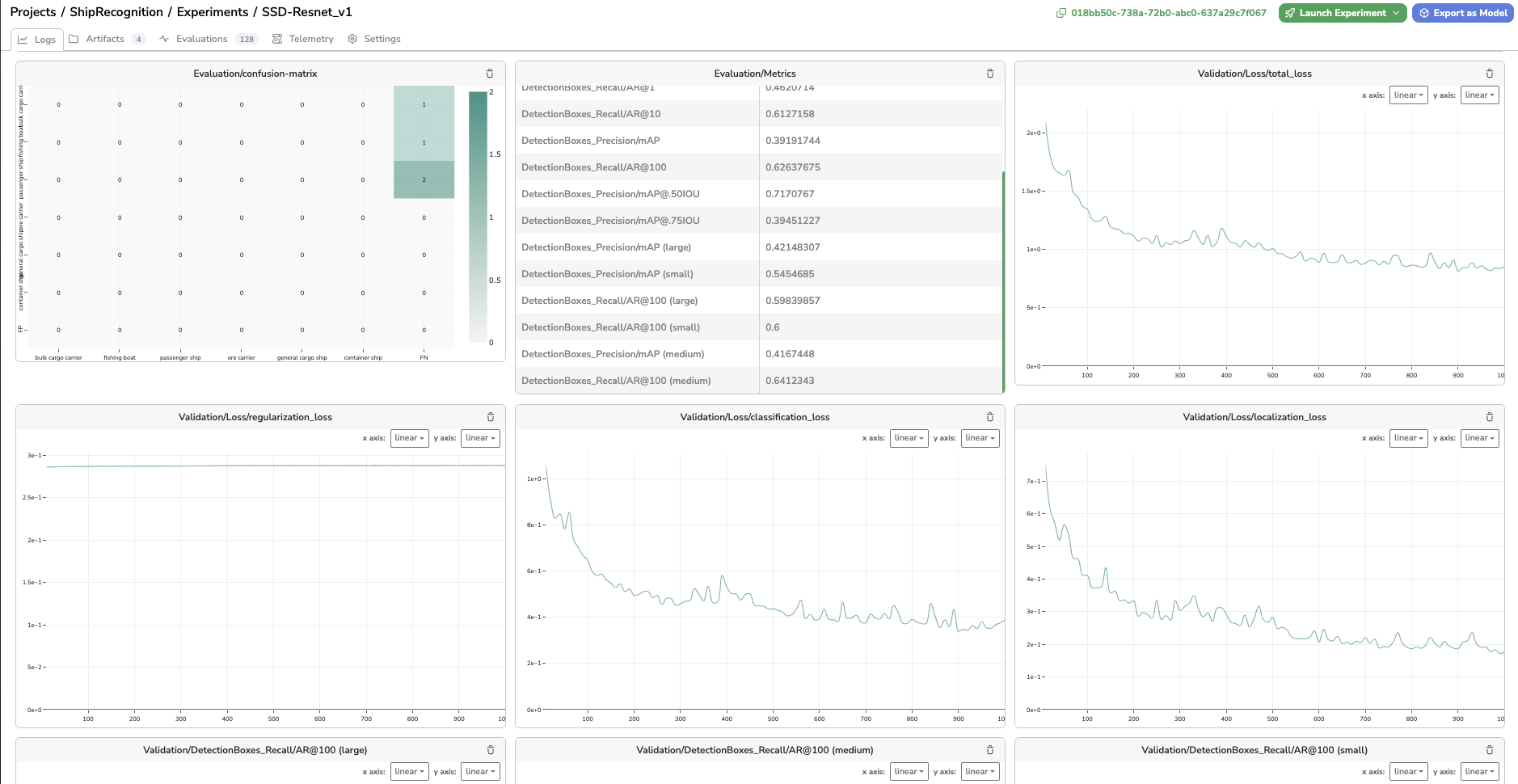

B. Metrics

Below the header, you'll find the Experiment Tracking dashboard, made up of placeholders dedicated to displaying the Metrics computed during the Experiment.

These placeholders are empty right after the Experiment is created; they are filled by the training script once the model training is launched.

Parameters and LabelMap

The only placeholders populated right after the Experiment is created are two tables: one displaying the LabelMap and one displaying the parameters, whose default values are inherited from the Base Architecture selected for the Experiment, if it defines any.
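The inheritance of default parameters can be pictured with the minimal Python sketch below. It assumes that parameters are a flat name-to-value mapping and that a LabelMap associates a class index with a label name; `BASE_DEFAULTS`, `resolve_parameters`, and `build_labelmap` are hypothetical names used for illustration, not part of the Picsellia SDK.

```python
from typing import Any, Dict, List, Optional

# Hypothetical defaults exposed by a Base Architecture (illustrative values only).
BASE_DEFAULTS: Dict[str, Any] = {"epochs": 100, "batch_size": 8, "learning_rate": 0.001}


def resolve_parameters(defaults: Dict[str, Any],
                       overrides: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Start from the inherited defaults, then apply any user-provided values."""
    params = dict(defaults)  # copy so the Base Architecture defaults are not mutated
    params.update(overrides or {})
    return params


def build_labelmap(labels: List[str]) -> Dict[str, str]:
    """Map a class index (as a string key) to each label name, in the given order."""
    return {str(index): name for index, name in enumerate(labels)}


params = resolve_parameters(BASE_DEFAULTS, {"epochs": 50})
labelmap = build_labelmap(["car", "person"])
print(params)    # {'epochs': 50, 'batch_size': 8, 'learning_rate': 0.001}
print(labelmap)  # {'0': 'car', '1': 'person'}
```

Copying the defaults before applying overrides keeps the inherited values intact, so several Experiments can share the same Base Architecture defaults.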

Because the Experiment Tracking dashboard is filled with Metrics by the training script, it is fully customizable. By integrating your own ModelVersion and training script into the Picsellia platform, you can define which Metrics are displayed in the Experiment Tracking dashboard of every Experiment that uses your ModelVersion and training script as its Base Architecture.

Details about the available Metric types can be found here. The process to integrate your own ModelVersion and training script as a Picsellia ModelVersion is detailed here.
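To illustrate why the dashboard content depends entirely on the training script, here is a hypothetical in-memory stand-in for the dashboard: the script decides which metric names exist and appends a value to each one at every epoch. `MetricsLog` is an illustrative class, not the Picsellia SDK; the actual SDK call used to send Metrics to the platform is not shown here.

```python
from collections import defaultdict
from typing import Dict, List


class MetricsLog:
    """Hypothetical stand-in for the Experiment Tracking dashboard.

    Each metric name maps to the values the training script has logged so far,
    the way a line chart accumulates points.
    """

    def __init__(self) -> None:
        self._series: Dict[str, List[float]] = defaultdict(list)

    def log(self, name: str, value: float) -> None:
        self._series[name].append(float(value))

    def series(self, name: str) -> List[float]:
        # .get() does not trigger the defaultdict factory, so unknown names stay absent.
        return list(self._series.get(name, []))

    def names(self) -> List[str]:
        return sorted(self._series)


# The training script, not the platform, decides which metrics exist.
metrics = MetricsLog()
for epoch in range(3):
    train_loss = 1.0 / (epoch + 1)  # placeholder for a real loss value
    metrics.log("train/loss", train_loss)
    metrics.log("train/epoch", epoch)

print(metrics.names())               # ['train/epoch', 'train/loss']
print(metrics.series("train/loss"))  # [1.0, 0.5, 0.3333333333333333]
```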

If you use a ModelVersion from the Public Registry as your Base Architecture, the Metrics displayed in the Experiment Tracking dashboard at the end of the training are the ones defined by the Picsellia team when packaging that ModelVersion.

For instance, below are some Metrics logged in the Experiment Tracking dashboard of an Experiment that uses the ssd-resnet152-640-0 ModelVersion, available in the Public Registry, as its Base Architecture.

Some of the Metrics logged using the ssd-resnet152-640-0 ModelVersion as a Base Architecture

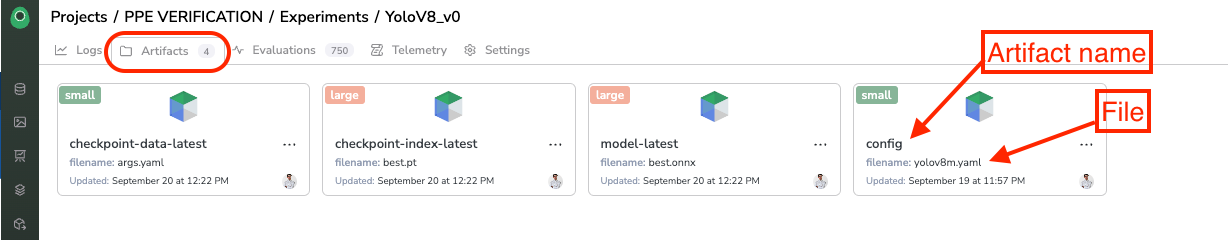

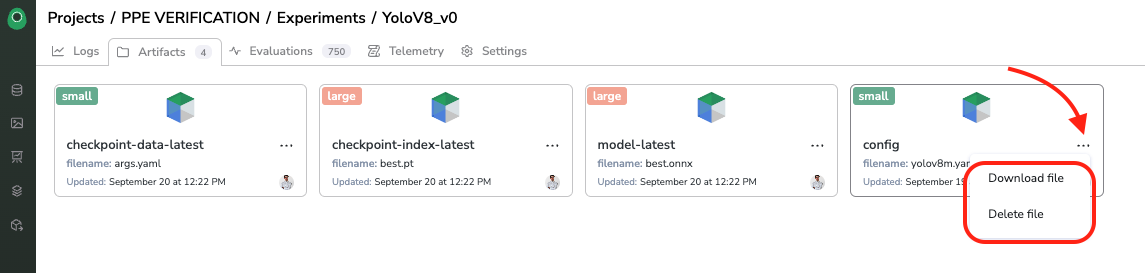

2. Artifacts

The Artifacts tab is crucial because it stores all the files related to the Experiment, which will define the ModelVersion you aim to create through the current Experiment.

Each of these files is called an Artifact, and each Artifact associates a file with an Artifact name.

Artifacts tab

Note that right after the Experiment is created, the Model files of the Base Architecture, if any, are inherited as Experiment files and listed in the Artifacts tab.

Filename is different from Artifact name

Each Artifact has a name on the Picsellia platform, and this name can differ from the name of the file it stores. This is because an Experiment evolves over time: for instance, it can be initialized with the weights inherited from the Base Architecture as an Artifact, and after training those weights are overwritten by a new weight file stored under the same Artifact.
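The distinction between an Artifact name and the filename it stores can be modelled as a mapping keyed by Artifact name. The sketch below is a hypothetical illustration (the `ArtifactStore` class and the `checkpoint-weights` name are assumptions, not Picsellia identifiers): storing a new file under an existing name replaces the file while the Artifact name stays the same.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Artifact:
    name: str      # stable name shown in the Artifacts tab
    filename: str  # name of the file currently stored under that Artifact


class ArtifactStore:
    """Hypothetical model of an Experiment's Artifacts tab."""

    def __init__(self) -> None:
        self._artifacts: Dict[str, Artifact] = {}

    def store(self, name: str, filename: str) -> None:
        # Storing under an existing name replaces the file but keeps the Artifact name.
        self._artifacts[name] = Artifact(name=name, filename=filename)

    def get(self, name: str) -> Artifact:
        return self._artifacts[name]

    def delete(self, name: str) -> None:
        del self._artifacts[name]


artifacts = ArtifactStore()
# Right after creation: weights inherited from the Base Architecture.
artifacts.store("checkpoint-weights", "base_model.pth")
# After training: new weights overwrite the file under the same Artifact name.
artifacts.store("checkpoint-weights", "trained_epoch_50.pth")
print(artifacts.get("checkpoint-weights").filename)  # trained_epoch_50.pth
```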

The Artifacts tab lists every Artifact attached to the Experiment, showing in particular its Artifact name and the filename it stores. You can also use the ... button to delete an Artifact or download the associated file.

Download or Delete an Artifact

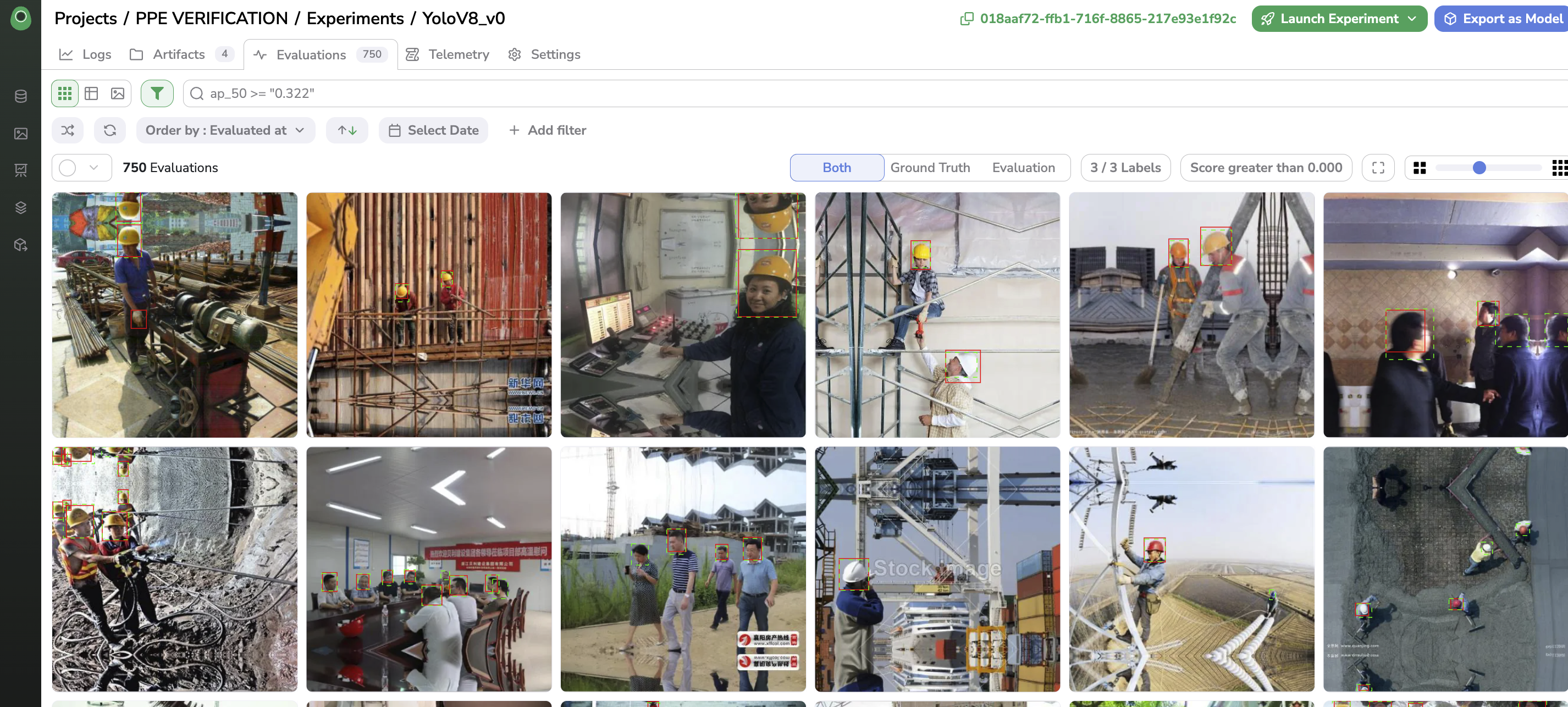

3. Evaluation

For each Experiment, you have access to a tab named Evaluation.

The Evaluation interface lets users visualize the Predictions made by the freshly trained ModelVersion on a dedicated set of Assets and compare them with the GroundTruth. In addition, for each evaluated Asset, you can access metrics such as average recall and average precision to get a precise view of the ModelVersion's performance.

Evaluation Interface

Evaluations should be computed and logged by the training script after the training step. The Assets to evaluate can be selected either by attaching a dedicated DatasetVersion for evaluation to the Experiment (for instance with the alias eval, as is the case when you use a ModelVersion from the Public Registry as the Base Architecture of your Experiment), or by the script itself from among the attached DatasetVersions. To provide a ground truth to compare against, the Assets selected for evaluation must have been annotated in a DatasetVersion. More details about the Evaluation interface are available here.
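One possible selection strategy, written as a hypothetical Python sketch: look for a DatasetVersion attached under a preferred alias (here `eval`, then `test`, then `val`) and keep only its annotated Assets. The dictionary layout used to represent attached DatasetVersions and Assets is an assumption made for illustration; a real script would retrieve them through the Picsellia SDK.

```python
from typing import Dict, List, Sequence

# Hypothetical record for an Asset: its filename plus its ground-truth annotations.
Asset = Dict[str, object]


def select_eval_assets(
    attached: Dict[str, List[Asset]],
    preferred_aliases: Sequence[str] = ("eval", "test", "val"),
) -> List[Asset]:
    """Return annotated Assets from the first attached DatasetVersion alias found."""
    for alias in preferred_aliases:
        if alias in attached:
            # Only annotated Assets can be compared with a GroundTruth.
            return [asset for asset in attached[alias] if asset.get("annotations")]
    raise ValueError(f"none of the aliases {list(preferred_aliases)} is attached")


attached = {
    "train": [{"filename": "a.jpg", "annotations": [[0, 0, 10, 10]]}],
    "eval": [
        {"filename": "b.jpg", "annotations": [[5, 5, 20, 20]]},
        {"filename": "c.jpg", "annotations": []},  # not annotated: skipped
    ],
}
print([a["filename"] for a in select_eval_assets(attached)])  # ['b.jpg']
```

Raising an error when no evaluation alias is attached, rather than silently falling back to the training DatasetVersion, avoids evaluating the model on images it was trained on.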

In all cases, it is up to the script author to define the evaluation strategy (image selection, Evaluation logging, metrics computation, etc.). A tutorial detailing how to integrate Evaluation in your training script is available here.
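As an example of the metrics-computation part of such a strategy, the sketch below computes precision and recall for a single Asset at one IoU threshold, greedily matching the highest-scoring Predictions to unmatched GroundTruth boxes. It assumes boxes given as `(x_min, y_min, x_max, y_max)`. Average precision and average recall, as displayed in the Evaluation interface, are typically aggregated over several score or IoU thresholds, so this is only a simplified building block, not necessarily how Picsellia computes them.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions: Sequence[Tuple[Box, float]],
                     ground_truth: Sequence[Box],
                     iou_threshold: float = 0.5) -> Tuple[float, float]:
    """Greedy matching: highest-score predictions claim their best unmatched GT box."""
    matched = set()
    true_positives = 0
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best_iou, best_idx = 0.0, -1
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx >= 0 and best_iou >= iou_threshold:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((1, 1, 10, 10), 0.9), ((50, 50, 60, 60), 0.4)]
print(precision_recall(preds, gt))  # (0.5, 0.5)
```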

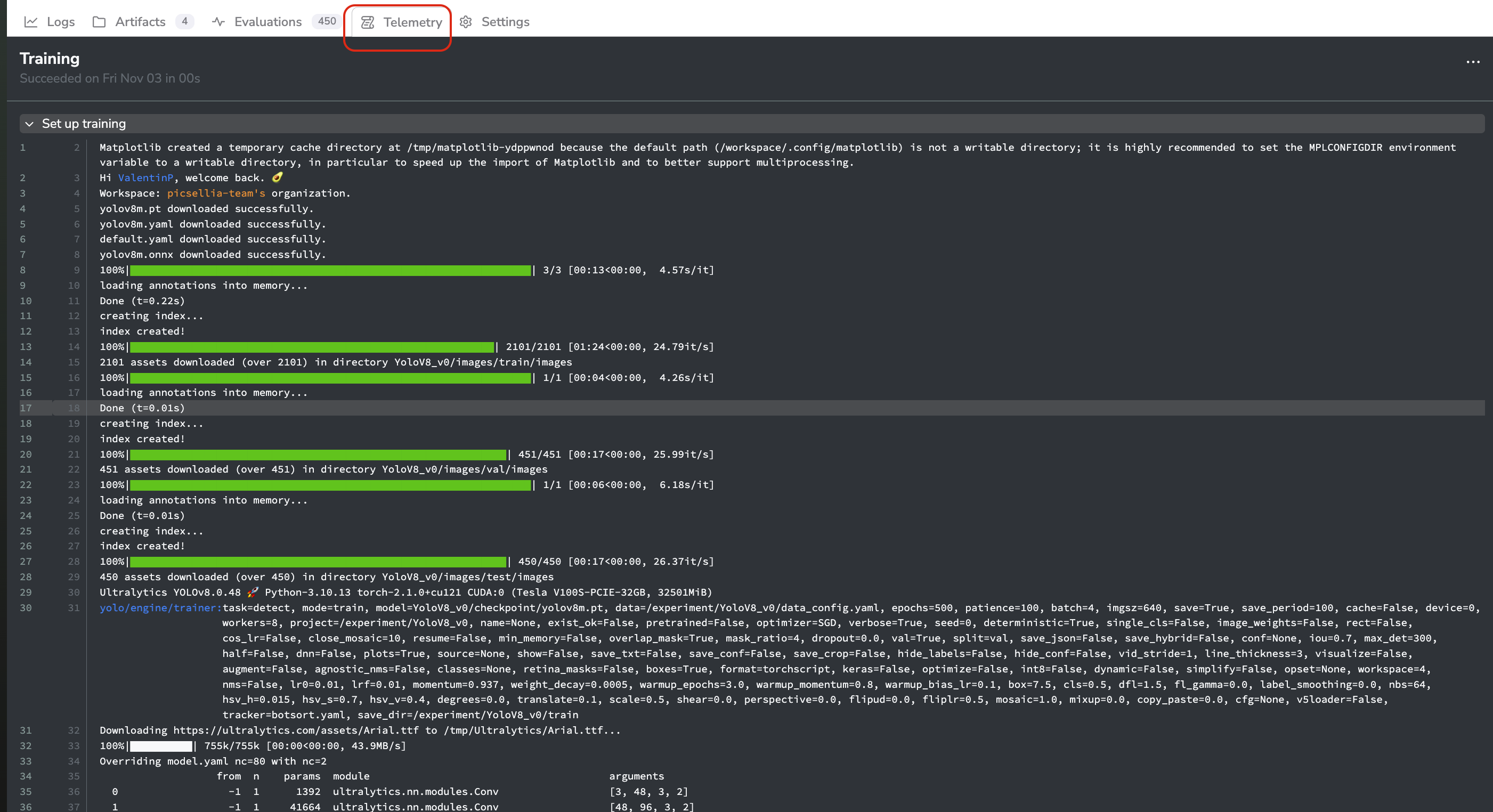

4. Telemetry

The Telemetry tab lets you visualize, in real time, all the information logged by the training script during its execution. For this to work, the script must log information and make it available in the Telemetry tab, as explained here.
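A minimal sketch of real-time-friendly logging in Python, assuming (this page does not confirm it) that the Telemetry tab receives what the script writes to standard output. Each line is timestamped and flushed immediately, because when standard output is not a terminal, as is common in containerized training jobs, Python block-buffers it and lines would otherwise appear with a delay. `log_line` is a hypothetical helper; follow the page referenced above for the supported mechanism.

```python
import sys
import time


def log_line(message: str, stream=sys.stdout) -> str:
    """Write one timestamped line and flush it immediately; return the line written."""
    line = f"[{time.strftime('%Y-%m-%d %H:%M:%S')}] {message}"
    print(line, file=stream, flush=True)  # flush=True bypasses block buffering
    return line


for epoch in range(2):
    log_line(f"epoch {epoch} done")
```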

Telemetry tab

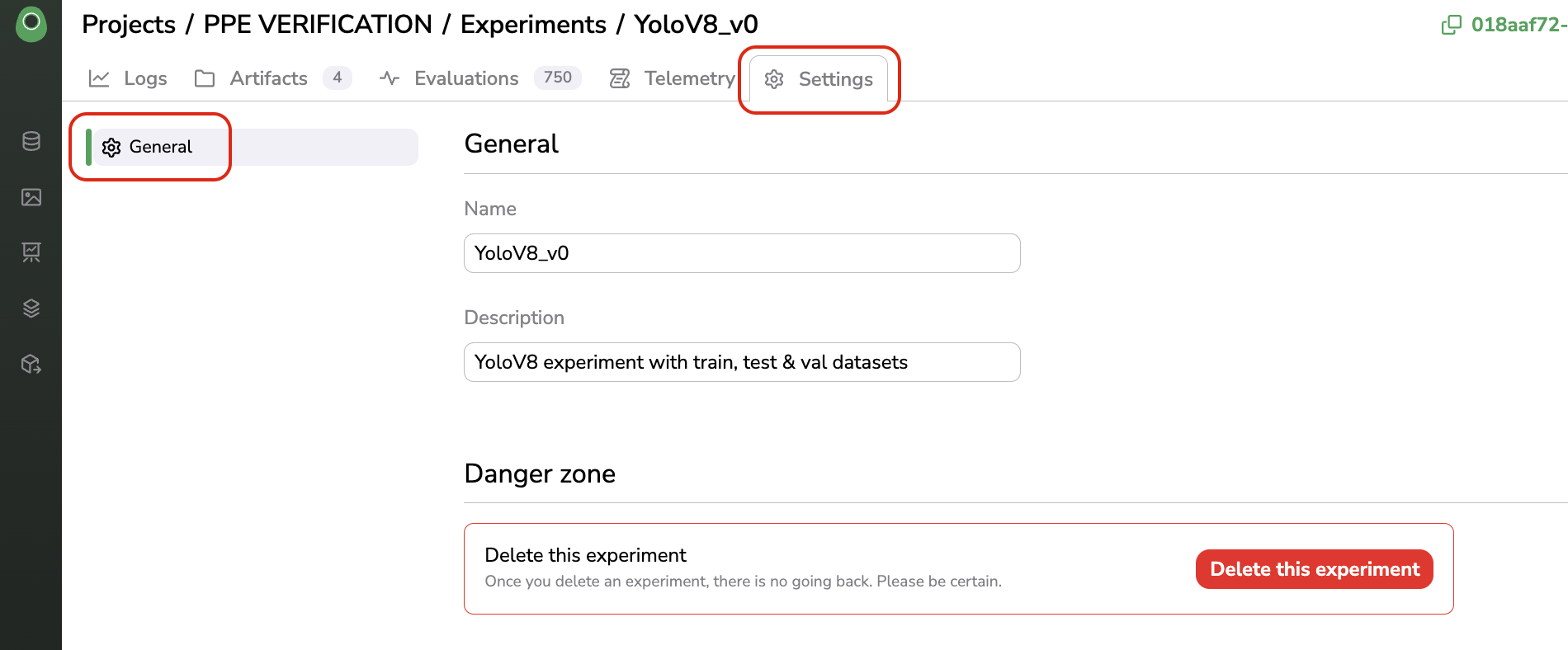

5. Settings

Each Experiment also has its own Settings tab, allowing users with sufficient permissions to update the Experiment's name or description, or to delete the Experiment.

Settings tab
