Datalake - Processings

1. What is a Processing?

Processing?A Processing is a piece of code (like a Python script) executed on Picsellia infrastructure that can interact with any object related to your Organization (Datalake, DatasetVersion, ModelVersion..).

This page details how to use aProcessing on aDatalake

The following pages are dedicated to the use of DatasetVersion Processing and ModelVersion Processing.

To explain this, let's start with a use case:

Let's say you want to perform data augmentation on a Picsellia Datalake.

Normally, the steps to achieve this would be:

- Downloading your images locally

- Running a script with some data-augmentation techniques (like rotating the image for example) on all of your images

- Uploading the augmented images to this new

Datalake

We know it can feel a little bit overwhelming. Although running a script can be considered an automatic task, this process is fully manual. In addition, you must be using a computer that is able to run the code (it has to be in the correct environment, etc...)

This is why we came up with Processing, to let you automate this process and launch it whenever you want, on the data you want, directly from the platform!

So let's see how to use the most common Processing, the auto-tagging of a Datalake.

AProcessing can be run on a Datalake, so you can perform tasks like:

- Auto-tagging of Data

- Data Augmentation

- Smart Dataset creation

- Metadata auto-completion

- ....

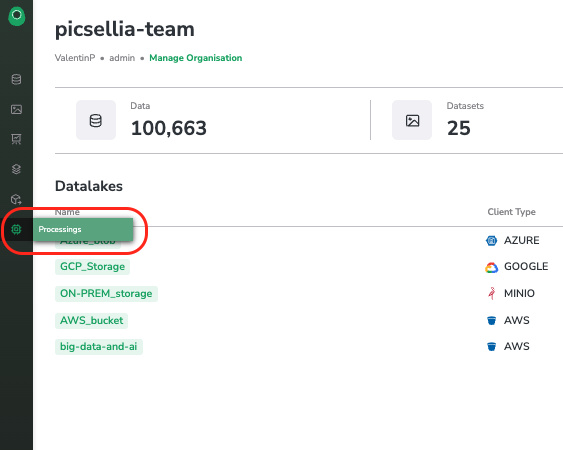

2. Access the Processing list

Processing listFirst of all, you can at any moment reach the list of available Processing by clicking on Processings on the Navigation bar as shown below:

Access Processings

This page provides access to all the Processing available for the current Organization.

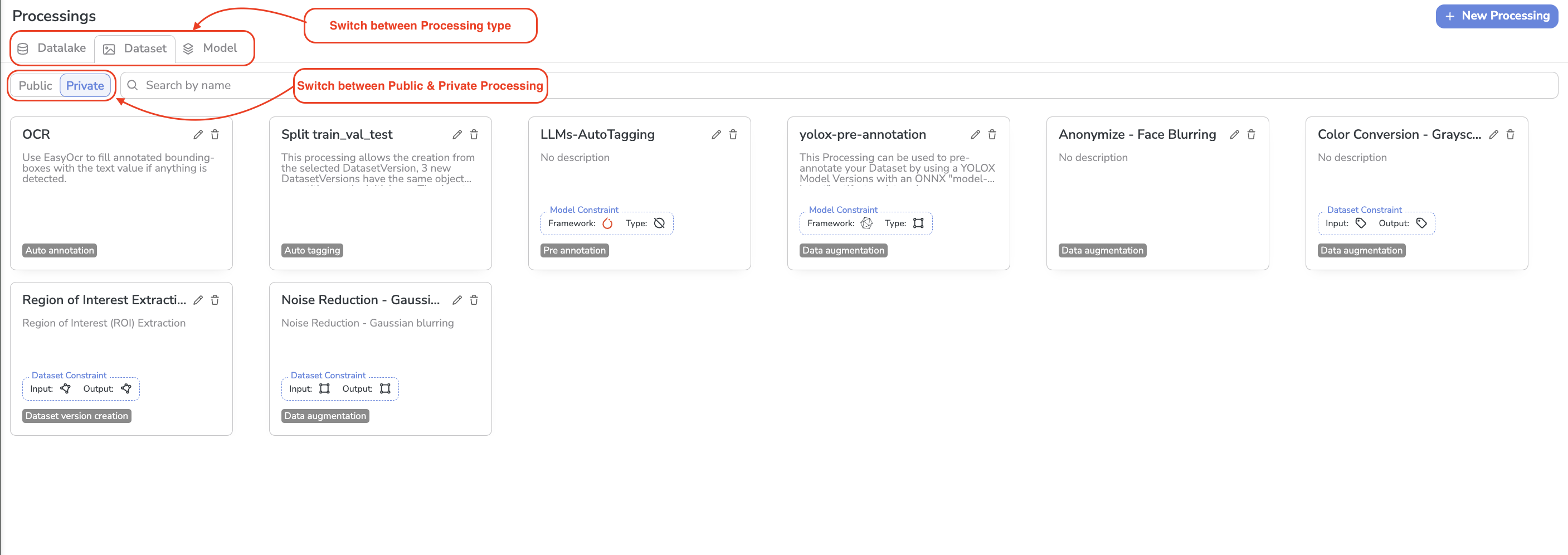

They are sorted by type of object they can be used on, meaning:

DatalakeDatasetVersionModelVersion

For each type of Processing, you will have access to all the Processing created by the Picsellia DataScience team (called Public Porcessings) alongside the ones you created (called Private Porcessings).

Processing list

On this view, for each Processing, is displayed its Name, Description, Task, and potential constraints.

It is also from this view that you can create or update any type of Private Processing. More details on this topic are available here.

3. Use a Processing on a Datalake

Processing on a DatalakeNow that we can easily list the available Processing it is high time to use them. In our case, we will use one on a Datalake.

Let's see one of the most useful examples: The auto-tagging of a Datalake with a CLIP ModelVersion stored in your Model Registry.

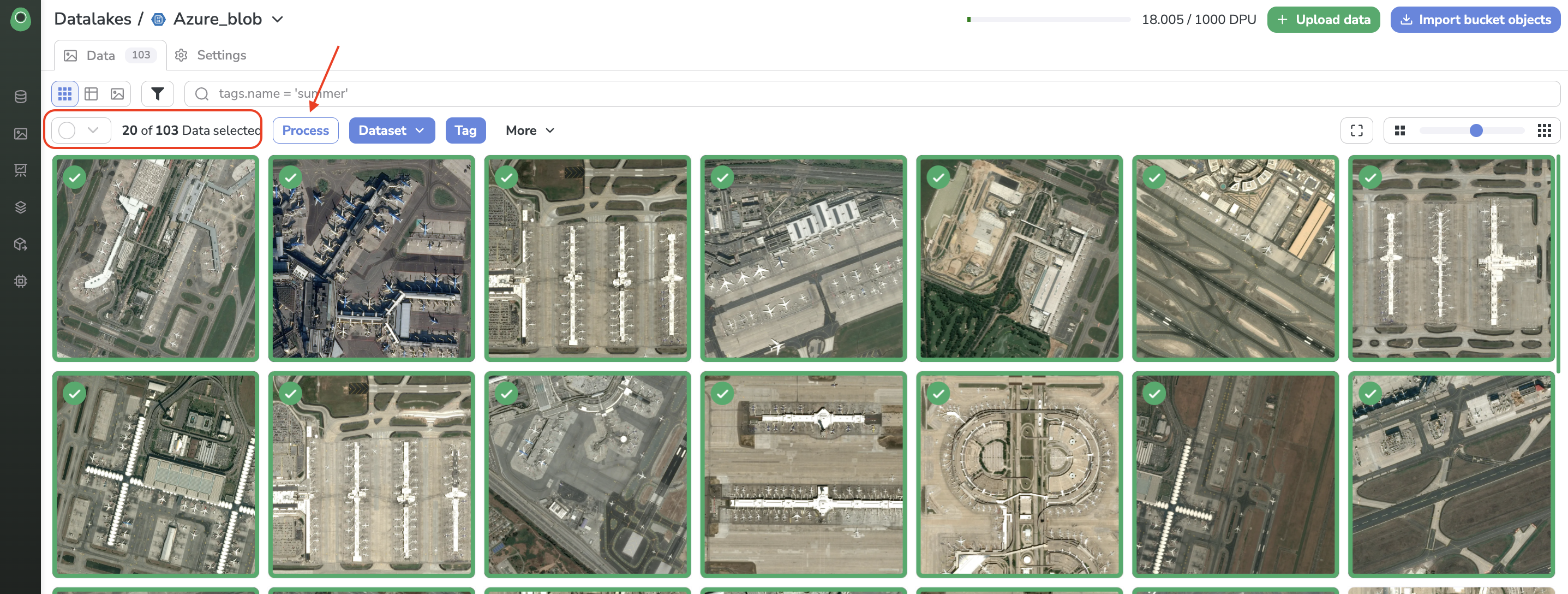

First of all, let's access the Datalake to which the Processing should be applied to.

Then, select all the Data that will be impacted by the Processing and click on process as shown below:

Process a Datalake

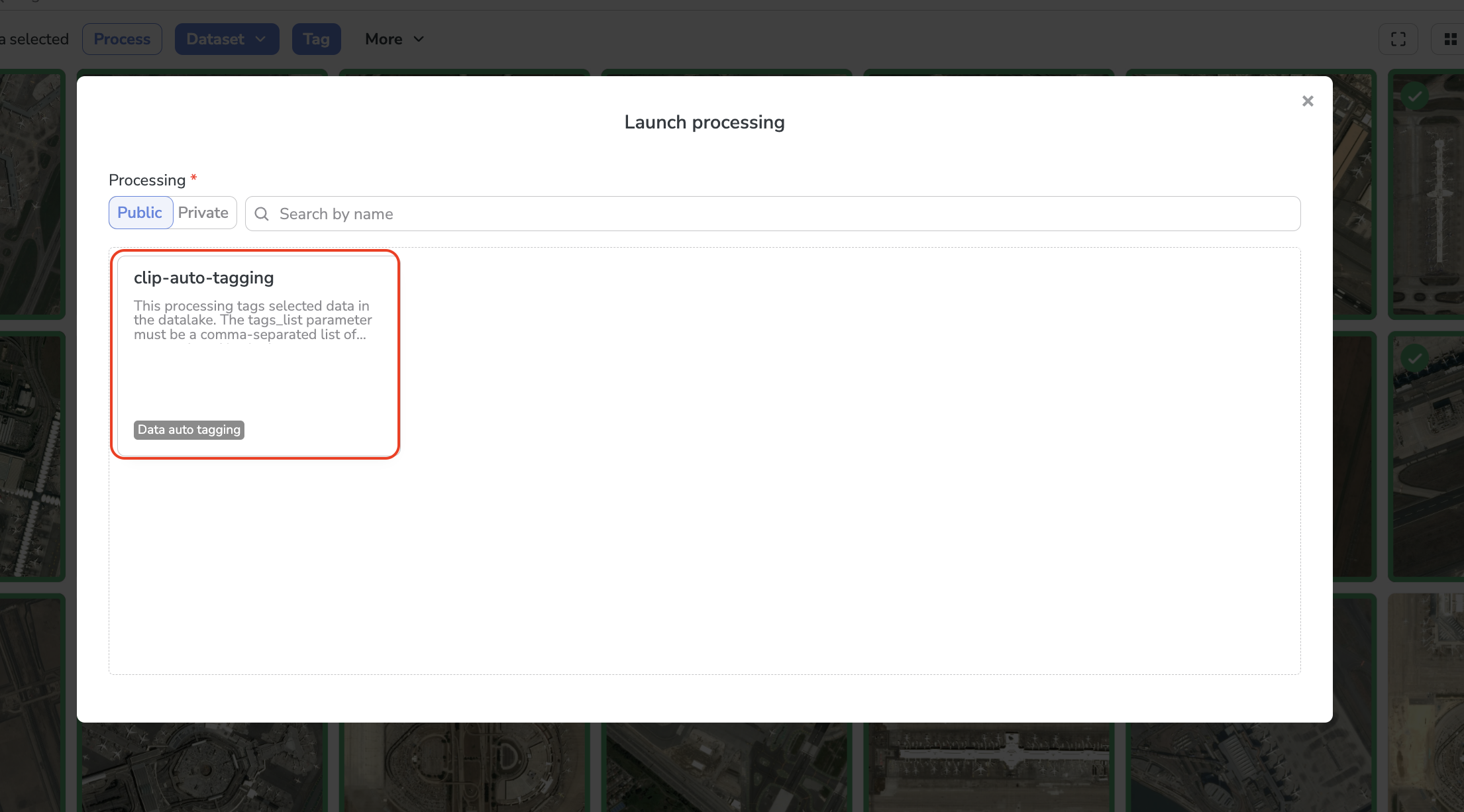

A modal will then open letting you choose among the Public and Private Processing, the one to be executed on the current Datalake.

Depending on the Processing task you might be requested to prompt some inputs to make sure the Processing can be executed as expected. These inputs can be parameters, the name of the new Datalake or the selection of a ModelVersion for instance.

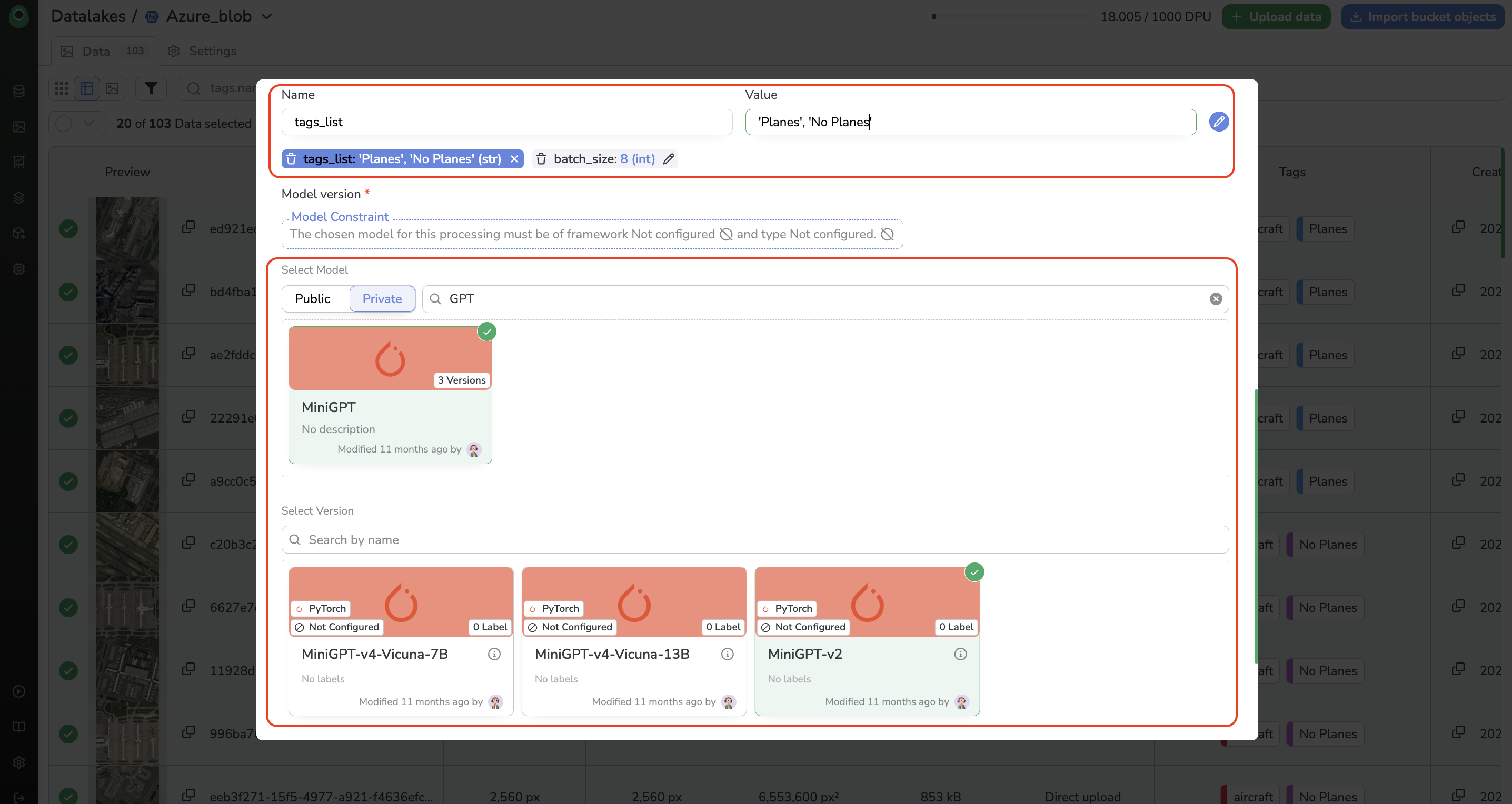

In the frame of our Auto-tagging use case, we will select the clip-auto-tagging Processing available to everyone as a Public Processing:

Auto-tagging Processing selection

Read theProcessingdescriptionAs already mentioned, each

Processinghas to be used in a specific way, it might require the user to prompt some parameters,ModelVersionorDatasetVersionname for example. Usually, theProcessingdescription provides all the information to properly configure it and avoid the execution to fall into error.

Once the Processing is selected, the modal will extend allowing you to define the execution parameters, in this case, the batch_size & tags_list, and select the ModelVersion that will be used to auto-tag the current Datalake.

Parameters defintion and ModelVersion selection

More details on thisProcessingThis particular

Processingwill use the selectedModelVersion(in this case, a large model such as MiniGPT), which will attach aTagto eachDatain yourDatalakeselected among the list of potential tags listed in the parameter tags_list.

Please note that a Processing flagged with Auto-Tagging as Task can embed constraints on the ModelVersion type &framework constraints. As a consequence, only ModelVersion that satisfies these constraints will be available for selection in the Processing selection modal.

Once everything is properly set you can launch the Processing execution by clicking on Launch, the Docker image associated will then be executed on Picsellia's infrastructure according to the configuration defined.

To create your own Processing on the Picsellia platform you can rely on the documentation available here.

4. Track the Processing progress

Processing progressWhen you launch a Processing, it creates a Job running in the background. You can access the status and many more information about it in the Jobs tab.it should be

Reach Jobs tab

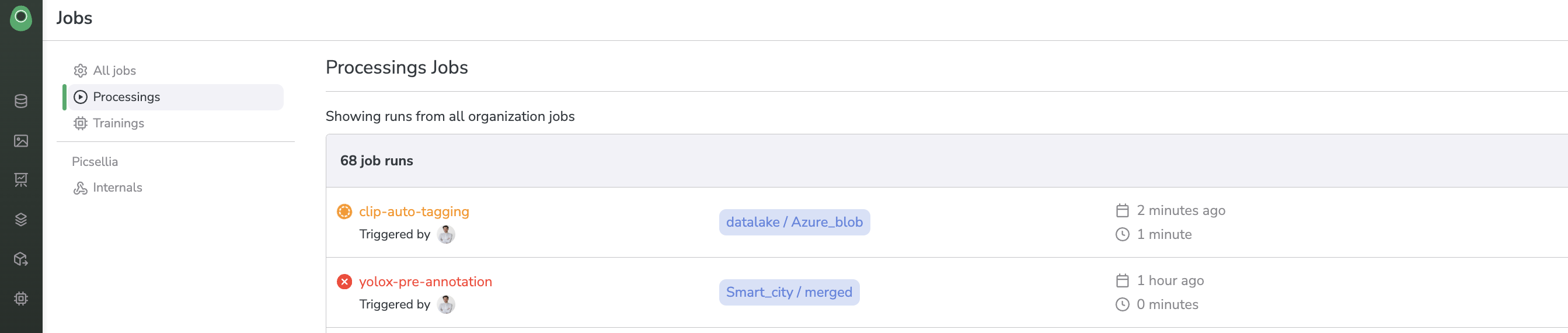

On this page, you can see the history of all the Job that ran or are currently running.

Jobs overview

If you just launched a Processing, you should see it at the top of the list. Let's inspect our freshly launched auto-tagging Job.

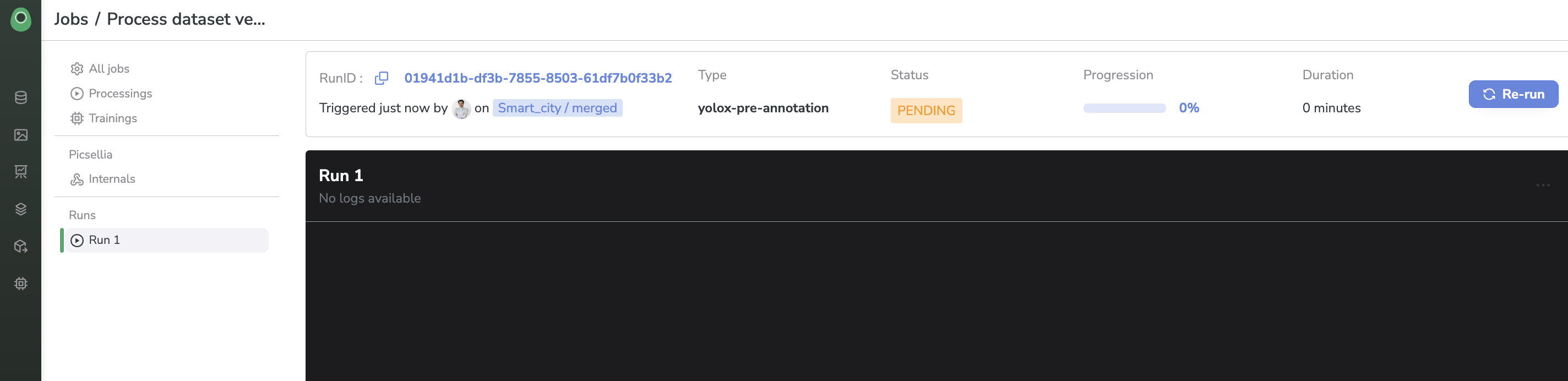

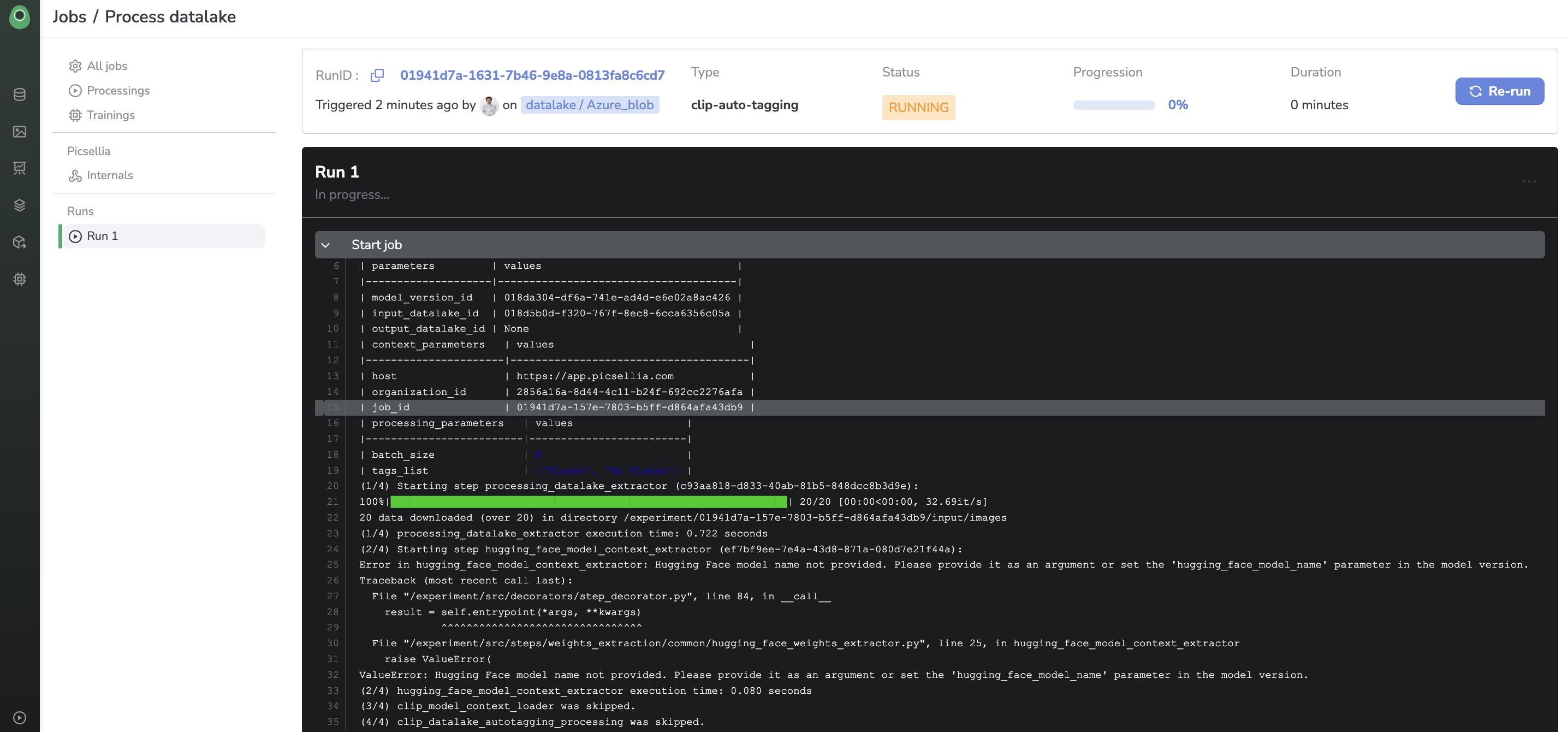

Job logs and status

When you launch a Processing, there will be a short moment when the status will be pending. Once your Job has been scheduled (and you start being billed), the status will change to running and you will see some logs being displayed in real-time (those come from the stdout of the server it runs on)

Job logs and status

This way, you can really track the progress and the status of your Job and check that everything is going well. In addition, it is a way to keep track of any performed action on a Datalake, such as what Processing has been executed. When ? and by who?

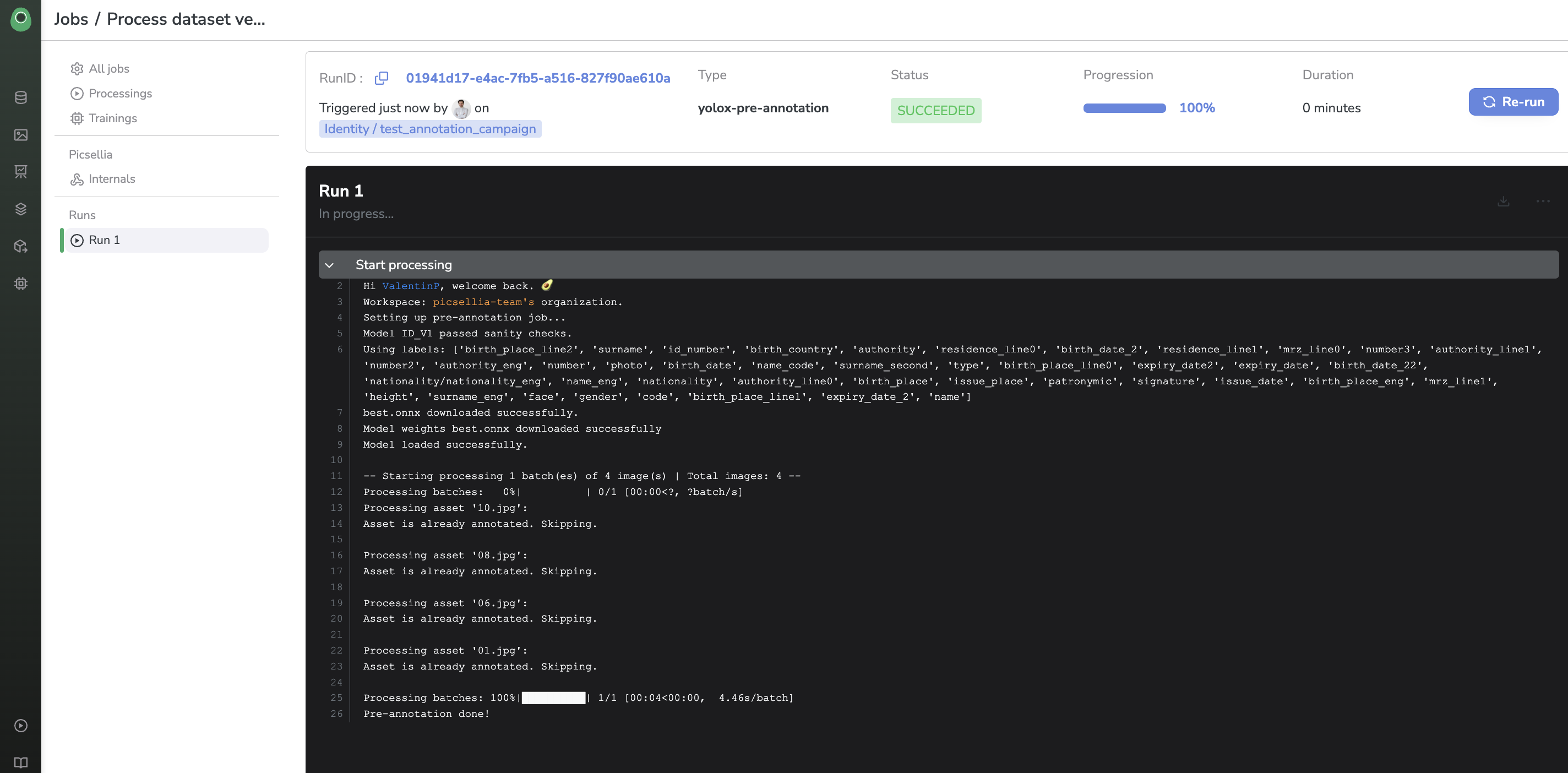

Once your Job is over, you will have access to the full history of logs, and the total running time, and the status will switch to succeeded (or failed, if there were issues at runtime).

Job logs ans status

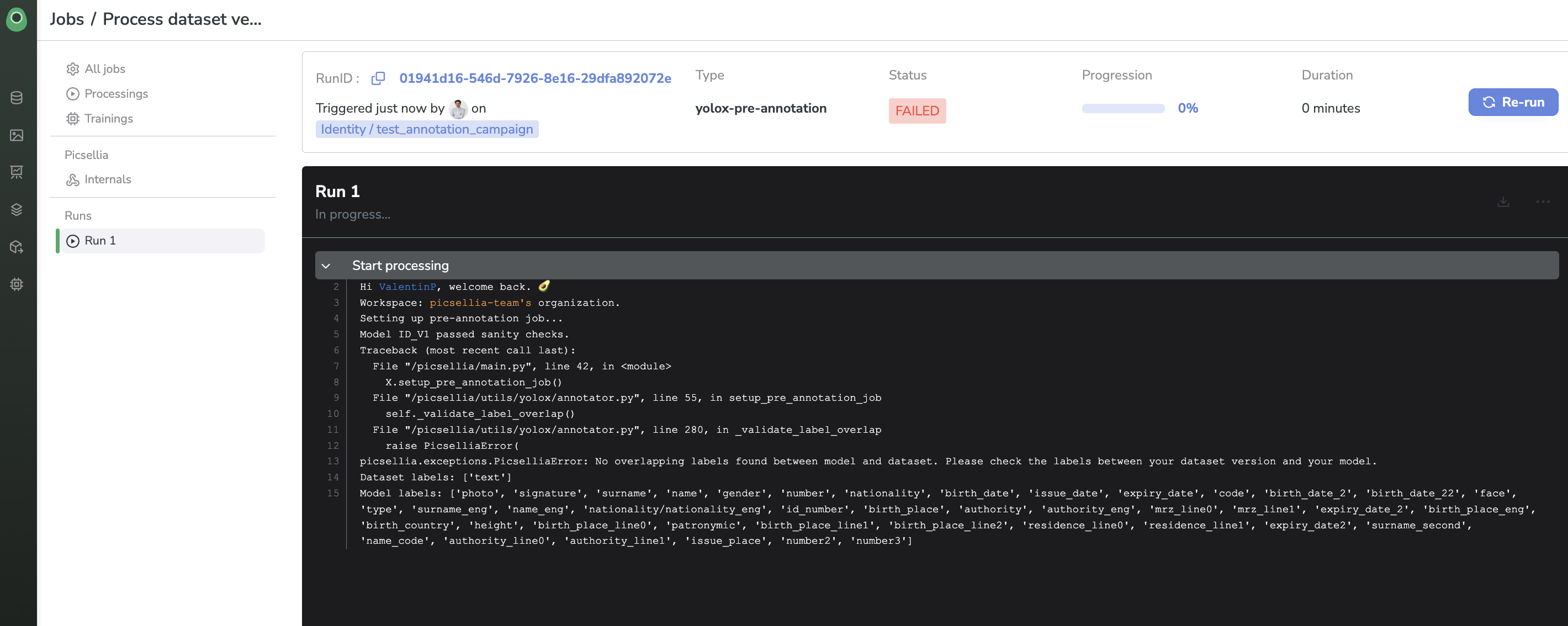

Your Job will fail sometimes, but you'll be able to find the issue thanks to the stack trace in the Job logs:

Job stack trace in case of failure

Once you have detected the issue, you have fixed it, and you have updated your Processing's Docker Image, you can click on the Re-run Job button. This will create and launch a second run just like the one on the left of the screen.

Jobruns

Re-run JobYou can retry your Job as many times as you want, as long as there is no active run (meaning no run in the pending or running

Job)

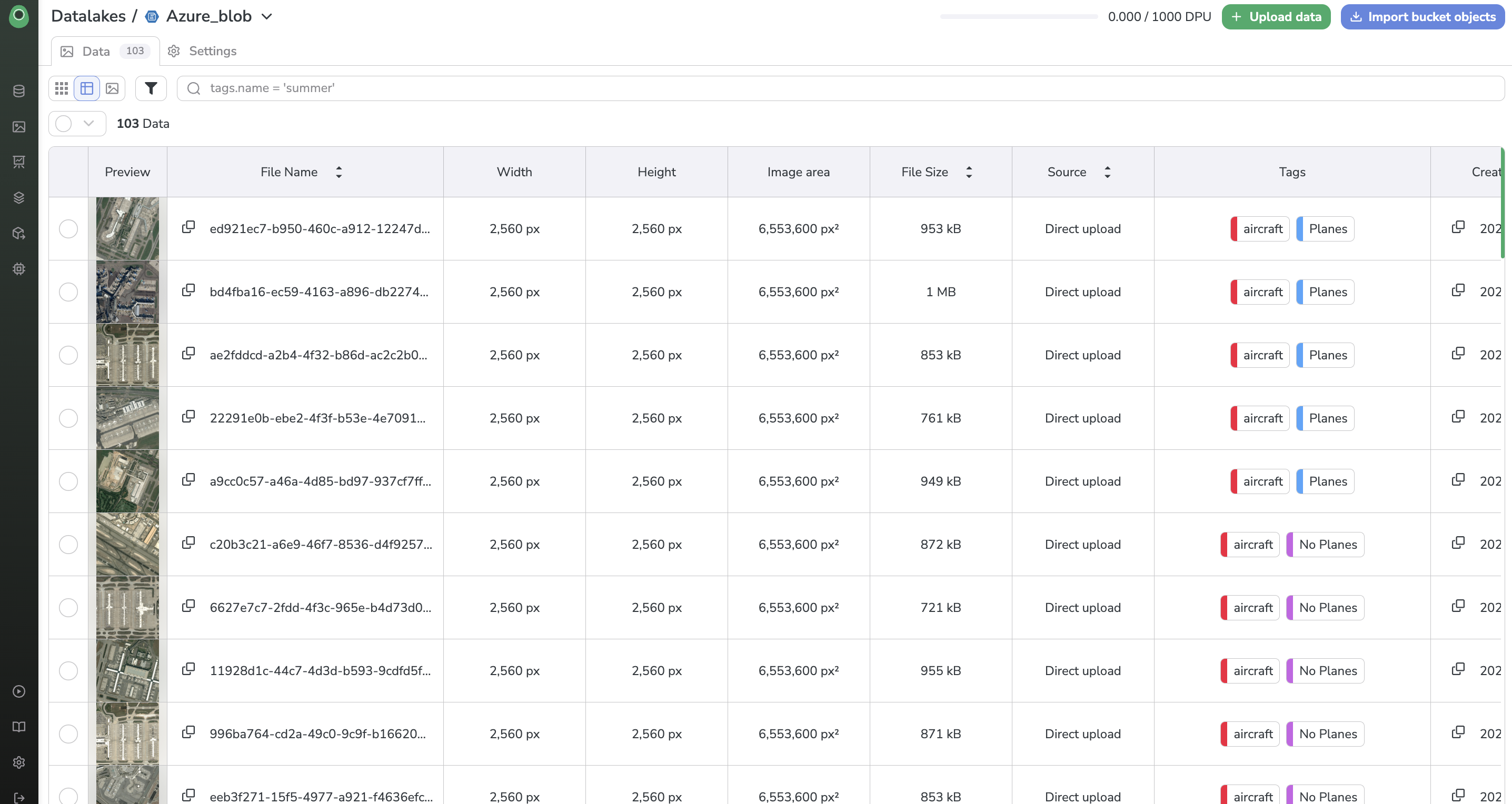

Now that our job has finished, let's have a look at our Datalake! It should be fully tagged with the defined Tag.

Auto-tagged Datalake

Our Datalake has been nicely auto-tagged by our ModelVersion with barely any effort. That's the power of Datalake Processings on Picsellia.

If you want to create your ownProcessingyou can follow this guide.

Updated 8 months ago